AI Suspected in 150,000 Academic Abstracts Annually, Detection Tools Struggle to Keep Up

July 31, DailyNewspapers.in — According to a recent report by the prestigious academic journal Nature, the use of generative AI in academic writing has surged dramatically. A new study suggests that roughly 10% of biomedical paper abstracts on PubMed—the largest database in the field—show signs of AI-generated content. That translates to approximately 150,000 papers each year involving AI assistance.

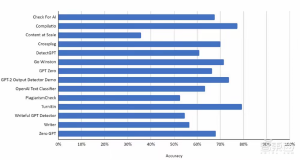

Researchers at the Berlin University of Applied Sciences have found that most leading AI-generated content (AIGC) detection tools average only about 50% to 60% accuracy and often flag human-written content as AI-generated. Conversely, AI-generated text can easily evade detection through techniques such as paraphrasing and synonym substitution. Notably, AI-assisted writing by native English speakers is even harder to detect.

AI Behind 150,000 Abstracts Annually, Non-Native Writers More Likely Detected

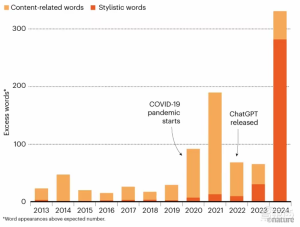

Since the release of ChatGPT in late 2022, generative AI tools have exploded in popularity across academic fields. A study by the University of Tübingen in Germany revealed that, during the first half of 2024, at least 10% of biomedical abstracts were written with the help of AI—an annual equivalent of 150,000 papers.

Analyzing 14 million PubMed abstracts published from 2010 to 2024, the team noted a sharp rise in certain stylistic words often favored by AI systems like ChatGPT. Based on how much more frequent these terms had become, they estimated the proportion of abstracts written with AI assistance.
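To make the idea concrete, here is a minimal sketch of an excess-word estimate. It is not the Tübingen team's actual method, which the report does not describe in detail. The word names and frequencies are hypothetical, and the figure of 1.5 million abstracts per year is simply what the article's own numbers imply (150,000 divided by 10%).

```python
def excess_usage_lower_bound(baseline_freq: float, observed_freq: float) -> float:
    """Rough lower bound on the share of abstracts affected, from one marker word.

    baseline_freq: fraction of abstracts containing the word before ChatGPT
    observed_freq: fraction of abstracts containing the word in the period studied
    """
    for freq in (baseline_freq, observed_freq):
        if not 0.0 <= freq <= 1.0:
            raise ValueError("frequencies must be fractions between 0 and 1")
    # Only the increase over the baseline is attributed to AI assistance;
    # a word that did not become more common contributes nothing.
    return max(0.0, observed_freq - baseline_freq)


def annual_papers(share: float, abstracts_per_year: int) -> int:
    """Convert an estimated share into an approximate yearly paper count."""
    return round(share * abstracts_per_year)


# Hypothetical marker words: (baseline frequency, observed frequency).
marker_words = {
    "word_a": (0.010, 0.110),
    "word_b": (0.020, 0.015),  # became rarer, so it adds no evidence
}

# Simplifying assumption: take the single largest excess as the estimate.
estimated_share = max(
    excess_usage_lower_bound(before, after)
    for before, after in marker_words.values()
)

# Assumption: about 1.5 million abstracts per year, implied by the article.
estimated_papers = annual_papers(estimated_share, 1_500_000)
```

Because the estimate counts only abstracts where a marker word appears more often than before, it is a floor rather than a ceiling: AI-assisted text that avoids those words goes uncounted.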

Interestingly, the use of AI in writing varied by country. Papers from countries such as China and South Korea showed higher usage rates than papers from English-speaking countries. However, the researchers believe native English-speaking authors may use AI just as frequently, but in ways that evade detection.

Before the generative AI boom, artificial intelligence was already widely used in fields like drug discovery and protein structure prediction. These applications were generally accepted since AI served as a supporting tool rather than a content creator.

Generative AI now raises two major concerns in academic writing: plagiarism and copyright infringement. AI tools can paraphrase published research in an academic tone, making the new content hard to flag as plagiarized. In addition, AI models trained on copyrighted data sometimes reproduce it verbatim. The New York Times even sued OpenAI after ChatGPT allegedly repeated its content without attribution.

Detection Tools Struggle in Cat-and-Mouse Game

In response to the growing use of generative AI, several companies have released AIGC detection tools—but these tools have largely failed to keep pace.

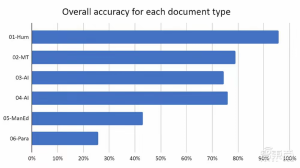

A study by Berlin University of Applied Sciences found that only five of the 14 commonly used detection tools achieved over 70% accuracy. On average, the tools scored between 50% and 60% accuracy.

Their performance dropped further when detecting AI-generated content that had been edited or rephrased. With simple techniques like synonym replacement and sentence restructuring, detection accuracy fell below 50%. The study concluded that the overall detection effectiveness of these tools was limited.
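For readers unfamiliar with how such figures are derived, the sketch below shows one common way to score a detector against texts whose origin is known. It is an illustration only, not the Berlin study's evaluation code, and the sample labels are invented.

```python
from dataclasses import dataclass


@dataclass
class DetectorScore:
    accuracy: float             # share of all texts classified correctly
    false_positive_rate: float  # share of human-written texts flagged as AI


def score_detector(is_ai: list[bool], flagged: list[bool]) -> DetectorScore:
    """Compare a detector's verdicts with the known origin of each text."""
    if not is_ai or len(is_ai) != len(flagged):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(truth == verdict for truth, verdict in zip(is_ai, flagged))
    human_total = sum(not truth for truth in is_ai)
    human_flagged = sum(
        (not truth) and verdict for truth, verdict in zip(is_ai, flagged)
    )
    false_positive_rate = human_flagged / human_total if human_total else 0.0
    return DetectorScore(correct / len(is_ai), false_positive_rate)


# Invented sample: four AI-generated texts followed by four human-written ones.
truth = [True, True, True, True, False, False, False, False]
verdicts = [True, True, False, False, True, False, False, False]
score = score_detector(truth, verdicts)
```

In this invented sample the detector is right on five of eight texts and flags one of the four human-written texts. On a test set split evenly between AI and human text, random guessing would be expected to score about 50%, which is why accuracy in the 50% to 60% range offers little practical assurance.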

Ironically, detection tools are more likely to misidentify original work translated from another language as AI-generated. This creates reputational risks for non-native English-speaking researchers and students who rely on translation tools to publish their work.

Blurred Lines Between Assistance and Misconduct

Despite concerns, generative AI has been a valuable tool for many scholars. Hend Al-Khalifa, an IT researcher at King Saud University in Riyadh, said that AI tools have helped her colleagues—who struggle with English—focus on research rather than language barriers.

However, the boundary between AI-assisted writing and academic misconduct is blurry. Soheil Feizi, a computer scientist at the University of Maryland, argues that using AI to paraphrase existing papers is clearly plagiarism.

That said, using AI to express one’s own ideas or to refine drafts should not be penalized—especially if authors disclose their use of AI tools. Many journals now require full transparency, including which AI systems and prompts were used. Authors are also held responsible for the content’s accuracy and originality.

Top journals have implemented policies around AI use. Science, for example, prohibits listing AI tools as co-authors but allows their use with clear disclosure. Nature requires that AI use be described in the “Methods” section of papers. As of October 2023, 87 of the top 100 academic journals had issued AI usage guidelines.

Conclusion: AI Isn’t the Enemy—Academic Culture Must Shift

During the recent graduation season in China, several universities adopted AIGC detection tools during thesis evaluations. However, these tools often failed to effectively prevent academic misconduct. Services aimed at reducing the “AI score” of a paper have emerged, frequently distorting the original work beyond recognition.

The researchers from Berlin University of Applied Sciences concluded that trying to combat AI misuse solely with detection tools is a losing battle. The real solution lies in shifting the academic focus away from publication quantity and back toward research quality.